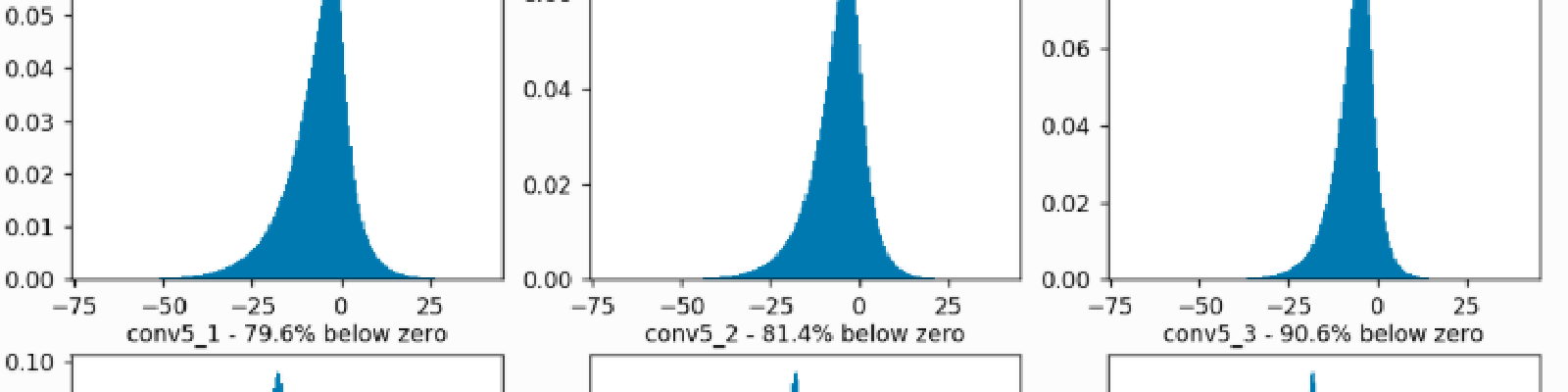

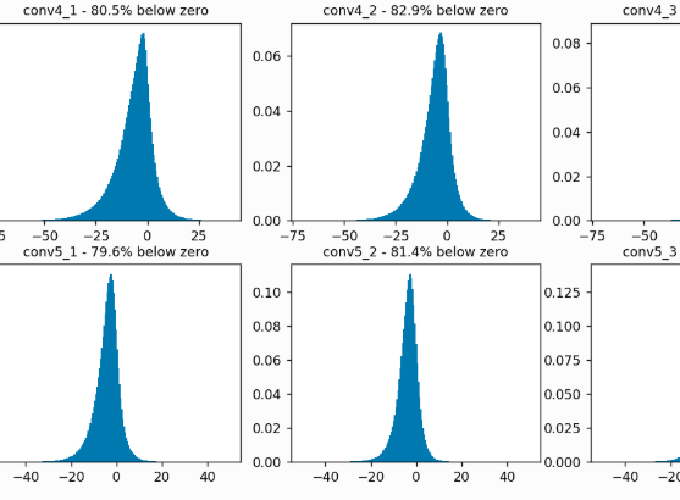

In this notebook I look at the activations of VGG16 and ResNet-18 to see how much work the ReLU is doing between convolutional layers. The inspiration comes from the intuition that the ReLU is an important nonlinearity that sparsifies the activations of a deep network. Does having lots of zeros in the activations help the neural network learn?

On top of this, the recent trend in CNNs has been to use smaller and smaller kernel sizes, with 3 by 3 being the standard nowadays. If the ReLU wasn’t there, it would be possible to combine successive convolutional layers into one larger layer (although not necessarily a faster one). Knowing how much the ReLU actually does would then give us a hint as to whether stacking small kernels is just a product of being quicker or whether linearity is to be avoided.
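The claim about merging layers can be checked numerically. Below is a minimal sketch in plain Python, not code from the notebook. It assumes a single channel, no bias, stride 1 and no padding, and the helper names (`correlate2d`, `merge_kernels`, `max_abs_diff`) are made up for illustration. Two stacked 3 by 3 layers with nothing in between should match one 5 by 5 layer whose kernel is the full convolution of the two small kernels, and putting a ReLU in between should break that equivalence.

```python
import random

def correlate2d(x, k):
    """'Valid' 2D cross-correlation, the operation a CNN conv layer applies."""
    kh, kw = len(k), len(k[0])
    out_h, out_w = len(x) - kh + 1, len(x[0]) - kw + 1
    return [[sum(k[a][b] * x[i + a][j + b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

def merge_kernels(k1, k2):
    """Full 2D convolution of two kernels: two 3x3 kernels give one 5x5 kernel."""
    h = len(k1) + len(k2) - 1
    w = len(k1[0]) + len(k2[0]) - 1
    merged = [[0.0] * w for _ in range(h)]
    for a in range(len(k1)):
        for b in range(len(k1[0])):
            for c in range(len(k2)):
                for d in range(len(k2[0])):
                    merged[a + c][b + d] += k1[a][b] * k2[c][d]
    return merged

def relu(x):
    return [[max(0.0, v) for v in row] for row in x]

def max_abs_diff(a, b):
    return max(abs(p - q) for ra, rb in zip(a, b) for p, q in zip(ra, rb))

rng = random.Random(0)  # fixed seed so the run is repeatable

def rand(h, w):
    return [[rng.uniform(-1.0, 1.0) for _ in range(w)] for _ in range(h)]

x = rand(8, 8)                  # toy single-channel "image"
k1, k2 = rand(3, 3), rand(3, 3)  # two random 3x3 kernels

merged = merge_kernels(k1, k2)
two_linear = correlate2d(correlate2d(x, k1), k2)       # conv -> conv
one_merged = correlate2d(x, merged)                    # single 5x5 conv
with_relu = correlate2d(relu(correlate2d(x, k1)), k2)  # conv -> ReLU -> conv

diff_linear = max_abs_diff(two_linear, one_merged)
diff_relu = max_abs_diff(with_relu, one_merged)
print("merged kernel size:", len(merged), "x", len(merged[0]))
print("max difference without ReLU:", diff_linear)
print("max difference with ReLU:", diff_relu)
```

The merged kernel has 25 weights against 18 for the two 3 by 3 kernels, which is one reason the merged layer is not necessarily faster. With multiple channels the same argument holds, but the merged kernel also has to sum over the intermediate channels.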

Check it out here.